Executive summary – what changed and why it matters

Interface, a San Francisco startup, uses large language models (LLMs) to autonomously audit industrial standard operating procedures (SOPs), cross‑checking them against regulations, technical drawings, and corporate policies. The company says its system, deployed at multiple energy sites, found 10,800 errors in 2.5 months. It has signed seven‑figure annual contracts (one customer contract is cited at more than $2.5M per year) and raised a $3.5M seed round this year.

This is more than a press release about a young founder. If the method scales and holds up under validation, it could compress multi‑year safety documentation programs into months, sharply lower audit costs, and reduce incident risk. It also raises governance, liability, and integration challenges that operators must treat as first‑order concerns.

Key takeaways

- Impact: LLM‑driven auditing can surface thousands of latent errors quickly – Interface claims 10,800 issues in 2.5 months and estimates manual remediation would have cost >$35M and taken 2-3 years.

- Commercial: Interface uses a hybrid per‑seat model with overage fees after rejecting pure outcome‑based pricing; a single deployment is valued at >$2.5M annually.

- Why now: maturing LLMs, aging institutional knowledge, and regulatory pressure together open a high‑value window for fast documentation audits in heavy industry.

- Risks: hallucination, false positives/negatives, integration gaps with CMMS/ERP, data security of drawings, and unclear liability if automated edits are adopted operationally.

Breaking down the capability and evidence

Interface combines large language models with domain inputs (regulations, pipeline schematics, valve specifications, and company policy) to parse SOPs autonomously and flag inconsistencies or unsafe instructions. The public evidence so far consists of a deployment across three sites for a major Canadian energy firm, the 10,800‑issue figure, and the company's pricing and contract‑size claims. The team is small (eight employees), and the product is early‑stage but already generating revenue.

Quantified claims matter: Interface says a manual review would cost >$35M and take years; the AI approach reportedly compresses that to months at a fraction of the cost and has already produced sizable contracts. Those savings are plausible if the flagged issues are real, actionable, and accepted by operations teams.

What this changes for operators and buyers

Short term: operators can accelerate SOP audits and prioritize high‑risk fixes without sending dozens of technicians to comb through documents. Time‑to‑value can shrink from years to weeks or months, enabling faster regulatory response and remediation.

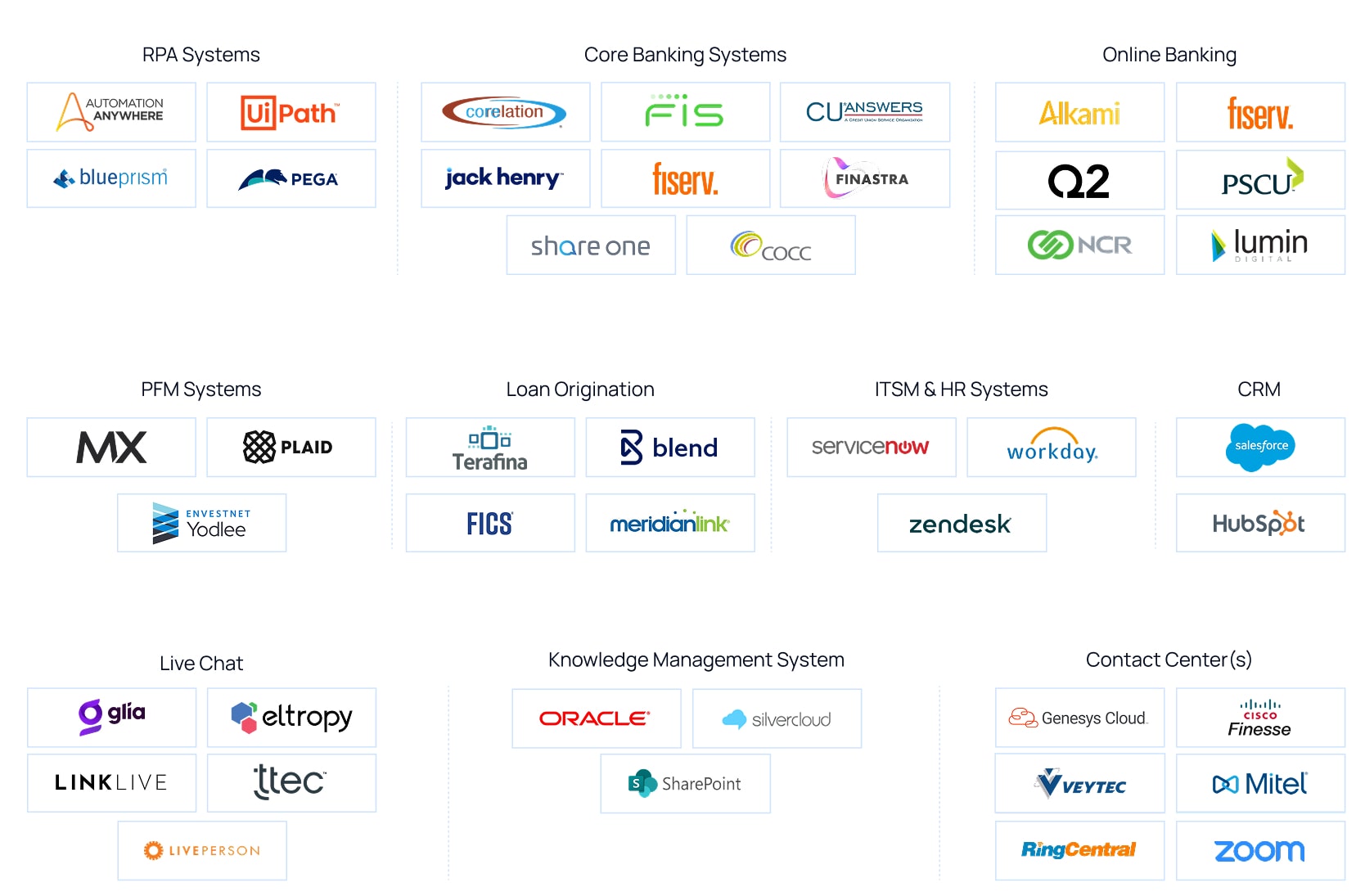

Procurement signals: buyers should treat LLM vendors as engineering partners, not point tools. Successful adoption requires integration into configuration management, CMMS/ERP systems, and existing change‑control workflows so that suggested edits become traceable, reviewable changes.

Competitive and market context

This approach sits between legacy manual audits and incumbent industrial software providers (e.g., traditional EHS suites and digital‑twin vendors such as AVEVA, Siemens, and Honeywell) that focus on process control and asset management. Interface's differentiator is fast semantic cross‑checking across heterogeneous documents using LLMs. Incumbents have deeper systems integration and long‑standing customer relationships, so expect them to add LLM capabilities or partner with startups.

Risks, governance, and validation points

- Accuracy and hallucination: LLMs can produce plausible but incorrect suggestions. Before operationalizing any edits, operators must measure false‑positive and false‑negative rates against a human gold standard.

- Liability: define in the contract who is responsible for incorrect recommendations, and require indemnities or insurance covering safety‑critical failures.

- Auditability: insist on immutable audit logs, versioning, and traceable evidence linking suggested changes to original source documents.

- Data security: industrial drawings and schematics are sensitive IP and potential export‑controlled data; require encryption, local deployment options, or air‑gapped processing.

- Change management: front‑line workers quickly lose trust in software that gives bad recommendations. Keep humans in the sign‑off loop and pilot with front‑line buy‑in.
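One common way to make an audit log tamper‑evident is a hash chain, where each entry's hash also covers the previous entry's hash, so editing any past record breaks verification of everything after it. The sketch below assumes that technique; `AuditLog` and its event fields are hypothetical, not a description of any vendor's logging. Note that a hash chain makes tampering detectable, not impossible; true immutability also needs write‑once storage or periodically anchoring the latest hash somewhere the vendor cannot edit.

```python
import hashlib
import json


class AuditLog:
    """Hypothetical append-only log; each entry's hash covers the previous hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(prev: str, event: dict) -> str:
        # Canonical JSON (sorted keys) so the same event always hashes the same way.
        payload = json.dumps({"prev": prev, "event": event}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        # Store a detached copy so later changes to the caller's dict are not silent.
        event = json.loads(json.dumps(event, sort_keys=True))
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, event)
        self.entries.append({"prev": prev, "event": event, "hash": digest})
        return digest

    def verify(self) -> bool:
        # Recompute every hash in order; any edited or reordered entry fails.
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["event"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

In a deployment, each suggestion, reviewer decision, and exported change would be appended as an event, giving auditors a single chain that links a procedure edit back to the evidence and approval behind it.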

Recommendations — what executives and product leads should do next

- Run a short, scoped pilot (6-12 weeks) on a small set of safety‑critical SOPs, side‑by‑side with manual review. Define acceptance metrics (precision, recall, time saved, and remediation cost avoided).

- Require explainability and audit trails from the vendor; mandate human sign‑off on any procedure changes and integrate outputs into your change‑control and CMMS processes.

- Demand security guarantees: on‑prem or VPC deployment, encrypted storage, and clear data‑use/retention policies — include liability and warranty clauses for safety‑critical failures.

- Prepare ops and training: allocate engineering review capacity and frontline engagement to vet suggested changes and build trust.
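The precision and recall acceptance metrics can be scored directly from a side‑by‑side pilot: precision is the share of tool‑flagged issues that reviewers confirm, and recall is the share of reviewer‑found issues the tool also caught. The sketch below assumes each finding has already been reconciled to a shared issue ID, which in practice is a manual matching step; `score_pilot` and `PilotMetrics` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PilotMetrics:
    true_positives: int   # flagged by the tool and confirmed by reviewers
    false_positives: int  # flagged by the tool but rejected by reviewers
    false_negatives: int  # found by reviewers but missed by the tool

    @property
    def precision(self) -> float:
        flagged = self.true_positives + self.false_positives
        return self.true_positives / flagged if flagged else 0.0

    @property
    def recall(self) -> float:
        actual = self.true_positives + self.false_negatives
        return self.true_positives / actual if actual else 0.0


def score_pilot(ai_flags: set[str], gold_flags: set[str]) -> PilotMetrics:
    """Compare issue IDs flagged by the tool with the human gold-standard set."""
    return PilotMetrics(
        true_positives=len(ai_flags & gold_flags),
        false_positives=len(ai_flags - gold_flags),
        false_negatives=len(gold_flags - ai_flags),
    )


m = score_pilot({"a", "b", "c"}, {"b", "c", "d", "e"})
print(m.precision, m.recall)  # 2 of 3 flags confirmed; 2 of 4 real issues caught
```

Low precision mainly costs reviewer time; low recall means unsafe steps go unflagged, so for safety‑critical SOPs a pilot should set an explicit recall floor rather than judging the tool on flag volume alone.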

Bottom line

Interface’s early results are striking and point to a practical application of LLMs that could materially reduce audit time and cost for heavy industry. But the headline numbers are only a start: buyers must validate accuracy, secure sensitive inputs, and lock down governance before relying on autonomous recommendations in safety‑critical workflows. For operators, the sensible path is a fast, measured pilot that proves value and surfaces integration and liability issues before scaling.