Lead: What changed and why it matters

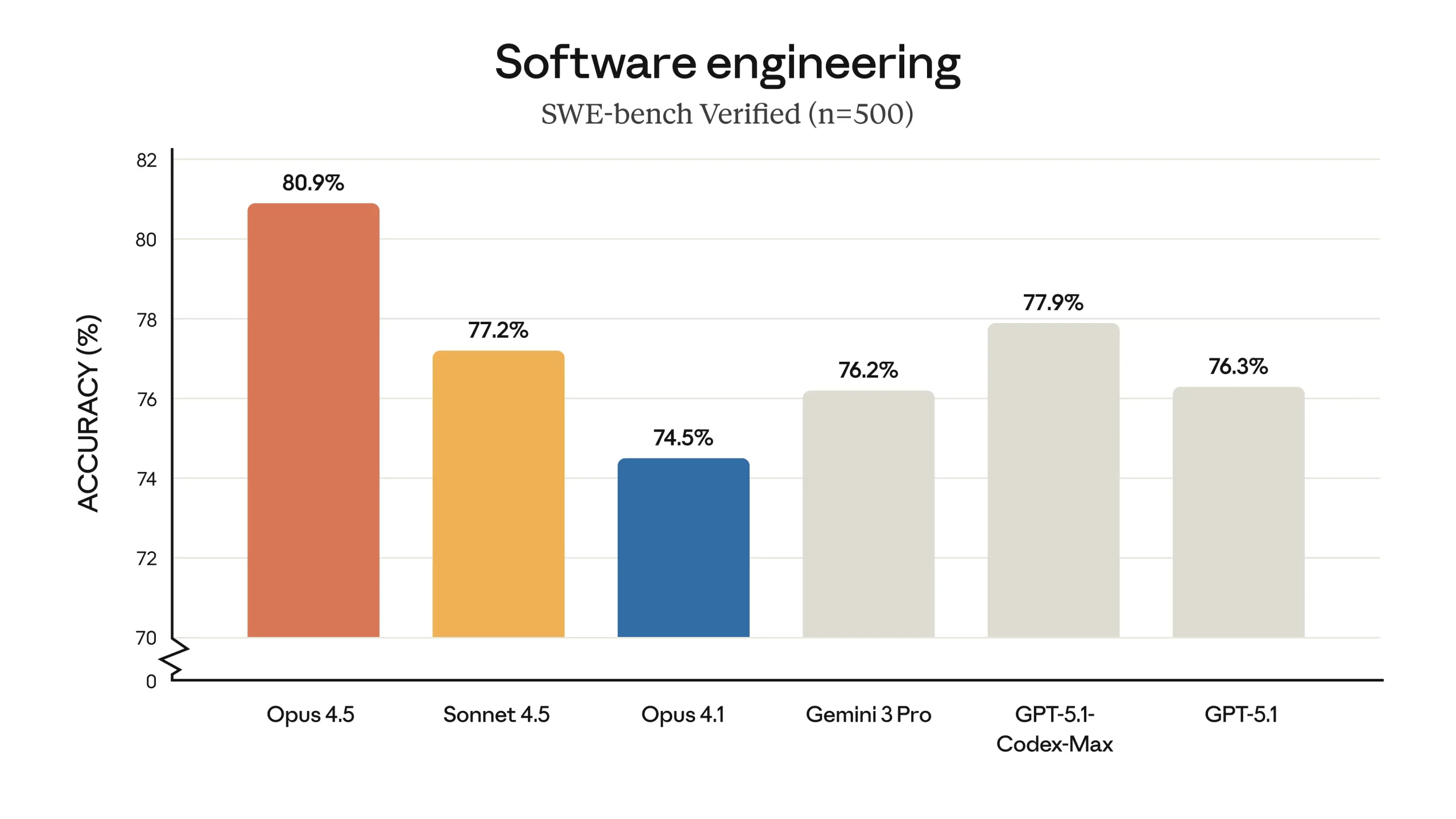

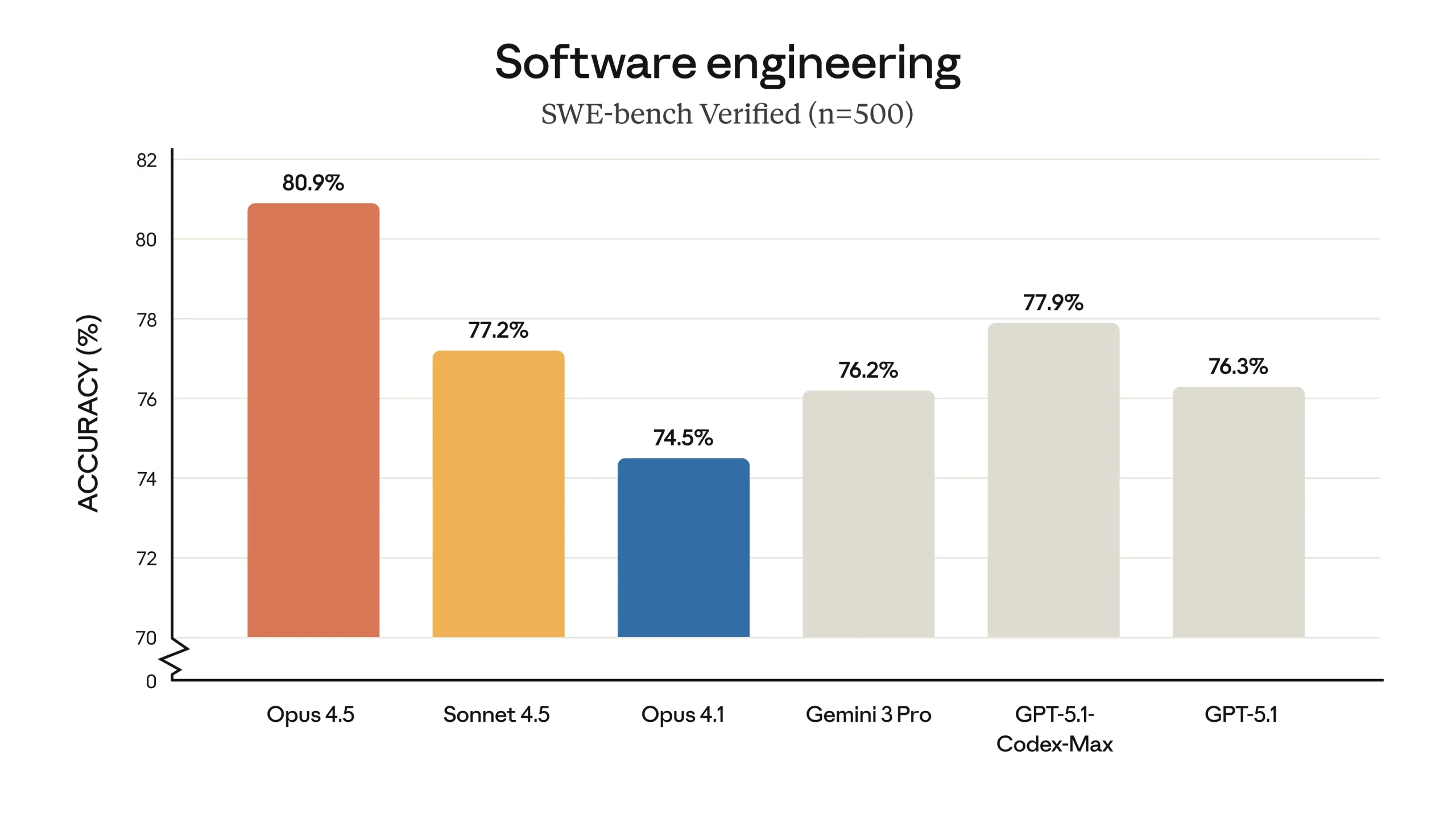

Anthropic has released Claude Opus 4.5, the final model in its 4.5 family, and it shifts the operational baseline for enterprise AI. According to Anthropic, Opus 4.5 is the first model to pass 80% on SWE‑bench Verified. It also adds memory and long‑context improvements (enabling what Anthropic calls “endless chat”) and extends integrated tool use into Google Chrome and Microsoft Excel for paid customers.

In plain terms: teams can run higher‑quality coding and agentic workflows directly inside browsers and spreadsheets, with lower token usage and longer context windows. The trade‑off is a Level 3 safety classification and the new governance obligations that come with it.

Key takeaways

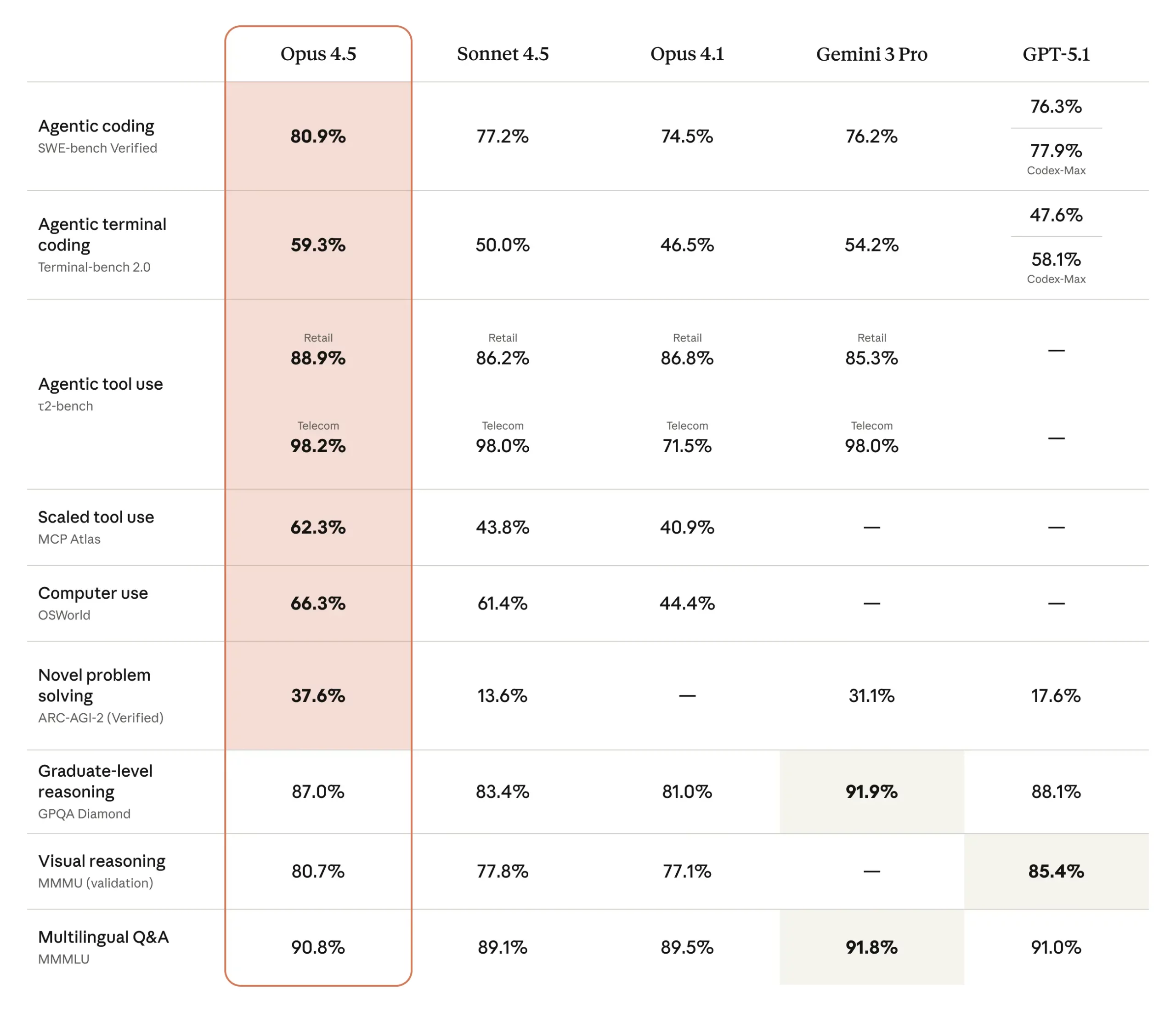

- Performance: Opus 4.5 exceeds 80% on SWE‑bench Verified and, per Anthropic, shows step‑change gains in vision, coding, and multi‑step reasoning.

- Integrations: Native Chrome and Excel integrations bring AI actions into two of the most common productivity surfaces – available to paid tiers.

- Cost & efficiency: Anthropic claims an ~85% reduction in token usage for multi‑tool workflows with its enhanced tool‑use framework; Opus pricing remains premium versus smaller models.

- Governance: Model is classified Level 3 for safety – stronger guardrails, monitoring, and access controls are required.

- Operational impact: Faster time‑to‑value for automation use cases, but oversight teams must tighten monitoring, permissions, and script execution policies.

Breaking down the release: capabilities and constraints

Opus 4.5 targets professional software engineering, agentic automation, and embedded productivity workflows. Anthropic highlights: advanced code generation and debugging, vision tasks, and a “computer use” capability that lets the model interact with application APIs (Chrome extension APIs and Office Add‑ins for Excel).

Concrete numbers that matter: a SWE‑bench Verified score above 80% (the first model to cross that threshold, per Anthropic), a reported rise in task accuracy from 79.5% to 88.1% with the Tool Search Tool, and a claimed ~85% reduction in token usage when the enhanced tool‑use framework handles multi‑tool interactions. The model also supports much longer context windows to preserve state across long sessions, which is the “endless chat” use case.
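To put those reported figures in perspective, the arithmetic below restates them as a percentage‑point gain, a relative drop in failed tasks, and a per‑task token count. It is only a back‑of‑the‑envelope sketch: the accuracy and token‑reduction values are the ones quoted above, while the 100,000‑token workload is a hypothetical number chosen for illustration.

```python
# Figures quoted in the article (Anthropic's reported numbers).
baseline_accuracy = 0.795   # task accuracy without the Tool Search Tool
improved_accuracy = 0.881   # task accuracy with the Tool Search Tool
token_reduction = 0.85      # claimed reduction for multi-tool workflows

# Absolute gain in percentage points.
point_gain = improved_accuracy - baseline_accuracy

# Relative reduction in failed tasks (error rate before vs. after).
baseline_error = 1 - baseline_accuracy
improved_error = 1 - improved_accuracy
relative_error_cut = (baseline_error - improved_error) / baseline_error

# HYPOTHETICAL workload: 100,000 tokens per task before the reduction.
tokens_before = 100_000
tokens_after = round(tokens_before * (1 - token_reduction))

print(f"Accuracy gain: {point_gain * 100:.1f} percentage points")
print(f"Relative drop in failed tasks: {relative_error_cut:.0%}")
print(f"Tokens per task: {tokens_before:,} -> {tokens_after:,}")
```

Read this way, the 8.6‑point accuracy gain corresponds to roughly 42% fewer failed tasks, which is often the more useful number when sizing human review effort.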

Technical and cost considerations

Opus 4.5 is available through Anthropic’s Claude Developer Platform and via cloud partners (Amazon Bedrock, Google Cloud Vertex AI). Anthropic’s Files API and tool APIs enable in‑place file manipulation and multi‑tool orchestration. Anthropic lists competitive token pricing for smaller models (for example, Haiku 4.5 at about $1 input / $5 output per million tokens), with Opus 4.5 positioned at a higher, enterprise tier. Expect a higher per‑token cost for production workloads, and check the current price list before budgeting.

Performance is pitched for low‑latency interactive work (browser and spreadsheet agents), but real costs will depend on volume, context size, and how much of the workflow runs on Opus vs cheaper models.
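The dependence on volume, context size, and model mix can be made concrete with a small cost model. The sketch below is an illustration under stated assumptions: the Haiku 4.5 rate is the one quoted above, while `PREMIUM_PLACEHOLDER` is a hypothetical stand‑in for the Opus 4.5 rate and should be replaced with Anthropic's published pricing. The function names and the workload numbers are likewise invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    input_per_mtok: float   # USD per million input tokens
    output_per_mtok: float  # USD per million output tokens


# Rate quoted in the article for the smaller model.
HAIKU_45 = Price(input_per_mtok=1.0, output_per_mtok=5.0)

# ASSUMPTION: placeholder for the premium tier; verify before use.
PREMIUM_PLACEHOLDER = Price(input_per_mtok=5.0, output_per_mtok=25.0)


def cost(price: Price, requests: float, in_tokens: int, out_tokens: int) -> float:
    """Total USD cost for `requests` calls with a fixed token profile."""
    per_request = (
        in_tokens * price.input_per_mtok + out_tokens * price.output_per_mtok
    ) / 1_000_000
    return requests * per_request


def blended_cost(requests: int, in_tokens: int, out_tokens: int,
                 premium_share: float) -> float:
    """Cost when `premium_share` of requests go to the premium model."""
    if not 0.0 <= premium_share <= 1.0:
        raise ValueError("premium_share must be between 0 and 1")
    premium_requests = requests * premium_share
    economy_requests = requests - premium_requests
    return (
        cost(PREMIUM_PLACEHOLDER, premium_requests, in_tokens, out_tokens)
        + cost(HAIKU_45, economy_requests, in_tokens, out_tokens)
    )


if __name__ == "__main__":
    # HYPOTHETICAL workload: 1M requests/month, 2,000 input and 500 output tokens each.
    for share in (0.0, 0.2, 1.0):
        total = blended_cost(1_000_000, 2_000, 500, share)
        print(f"premium share {share:.0%}: ${total:,.0f} per month")
```

Varying `premium_share` and the token profile shows quickly how sensitive a budget is to context size and to how much traffic is reserved for the premium model.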

Safety, governance and operational risks

Anthropic classifies Opus 4.5 as a Level‑3 safety model (AI Safety Level 3, or ASL‑3, under its Responsible Scaling Policy), a signal that the model can perform powerful, agentic actions that could be misused or produce harmful automation. Enterprises should implement stricter access controls, logging, script approval flows, and sandboxing for any auto‑execution in Chrome or Excel.

Key risks to mitigate: unauthorized script execution in spreadsheets, browser‑level data exfiltration during web automation, prompt injection across long contexts, and over‑reliance without human‑in‑the‑loop checks for finance, legal, and security scripts.
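One way to picture a script approval flow with audit logging is a gate that only lets a generated script run if a human has approved that exact content. The sketch below is a minimal illustration, not a description of any Anthropic, Chrome, or Excel feature: the `ScriptGate` class and its method names are hypothetical, and a real deployment would add authentication, durable log storage, and a sandboxed runner.

```python
import hashlib
import json
import time


class ScriptGate:
    """Hypothetical approval gate: scripts run only if their hash was approved."""

    def __init__(self) -> None:
        self._approved: dict[str, str] = {}  # sha256 digest -> reviewer
        self.audit_log: list[str] = []       # JSON lines, one per decision

    @staticmethod
    def fingerprint(script: str) -> str:
        # Hash the exact script text so any later edit invalidates approval.
        return hashlib.sha256(script.encode("utf-8")).hexdigest()

    def approve(self, script: str, reviewer: str) -> str:
        digest = self.fingerprint(script)
        self._approved[digest] = reviewer
        self._record("approve", digest, reviewer, allowed=True)
        return digest

    def may_execute(self, script: str, requested_by: str) -> bool:
        digest = self.fingerprint(script)
        allowed = digest in self._approved
        self._record("execute_request", digest, requested_by, allowed)
        return allowed

    def _record(self, action: str, digest: str, actor: str, allowed: bool) -> None:
        # Every decision, including denials, is appended to the audit trail.
        self.audit_log.append(json.dumps({
            "ts": time.time(),
            "action": action,
            "sha256": digest,
            "actor": actor,
            "allowed": allowed,
        }))


if __name__ == "__main__":
    gate = ScriptGate()
    script = "=SUM(B2:B40)"  # stand-in for a model-generated spreadsheet action
    print(gate.may_execute(script, "agent"))        # denied: not yet reviewed
    gate.approve(script, reviewer="finance-lead")
    print(gate.may_execute(script, "agent"))        # allowed: exact text approved
    print(gate.may_execute(script + " ", "agent"))  # denied: content changed
```

Keying approval to a content hash means a script that is modified after review, for instance through prompt injection later in a long session, has to go back through the gate.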

Competitive context — when Opus 4.5 makes sense

Compared with contemporary offerings (OpenAI GPT‑4.x and Google models), Opus 4.5’s differentiators are integrated tool use, longer persistent memory, and a strong safety narrative. If your workflows require in‑app automation (form filling, spreadsheet scripts, cross‑tab research), Opus 4.5 is compelling. If you need lower cost at scale for simpler prompts, smaller models or specialized toolchains remain more economical.

Recommendations — who should act and next steps

- Product leaders (AI/Automation): Pilot Opus 4.5 for key agentic workflows in Chrome and Excel with strict limits. Run a 30 to 60 day proof of concept (POC) on a non‑production dataset to validate accuracy, cost, and control requirements.

- Security & GRC: Require script review gates, audit logging, and runtime sandboxing before any auto‑execution capability is enabled. Update data governance to address browser and spreadsheet surface risk.

- Platform/Infra teams: Plan for hybrid consumption: route high‑volume, low‑risk prompts to cheaper models and reserve Opus for high‑value automation. Budget for increased token spend and monitoring.

- Legal/Compliance: Reassess third‑party data sharing and export controls for browser‑based scraping and Excel file movement; ensure retention and disclosure policies map to new integration behaviors.
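The hybrid consumption recommendation for platform teams can be sketched as a simple routing rule. This is an illustrative assumption about how such a policy might look, not a prescribed design: the tier labels, the `Task` fields, and the 100,000‑token threshold are all hypothetical, and the labels are deliberately not real model identifiers.

```python
from dataclasses import dataclass

# HYPOTHETICAL tier labels; map these to real model IDs in your own config.
PREMIUM = "premium"   # reserved for an Opus-class model
ECONOMY = "economy"   # a smaller, cheaper model


@dataclass(frozen=True)
class Task:
    name: str
    needs_tools: bool     # drives a browser, spreadsheet, or other tool
    high_value: bool      # flagged by the business owner as high value
    context_tokens: int   # estimated prompt size


def choose_tier(task: Task, long_context_threshold: int = 100_000) -> str:
    """Send only high-value tool-driven or long-context work to the premium tier."""
    demanding = task.needs_tools or task.context_tokens > long_context_threshold
    if task.high_value and demanding:
        return PREMIUM
    return ECONOMY


if __name__ == "__main__":
    tasks = [
        Task("quarterly reconciliation in Excel", True, True, 20_000),
        Task("FAQ answer", False, False, 500),
        Task("bulk ticket tagging", True, False, 500),
    ]
    for t in tasks:
        print(f"{t.name}: {choose_tier(t)}")
```

Keeping the rule explicit and small makes it easy to audit which workloads are allowed to consume the premium budget and to adjust the threshold as pilot data comes in.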

Bottom line

Anthropic’s Opus 4.5 advances agentic AI practicality by combining stronger coding/vision performance with direct Chrome and Excel hooks. That reduces time‑to‑value for automation but raises governance obligations and cost tradeoffs. For enterprises, the right approach is targeted pilots, tightened controls, and a consumption strategy that balances Opus’s strengths against cheaper alternatives for routine work.